Visualising AI

Partnering with Google DeepMind, I was thrilled to be trusted with visualising two of their concepts for the 4th round of a project they call Visualising AI.

DeepMind's Visualising AI project aims to open up conversations around AI. By commissioning a wide range of artists to create open-source imagery, the project seeks to make AI more accessible. It explores the roles and responsibilities of the technology, weighing up concerns and societal benefits in a highly original collection of artists' works.

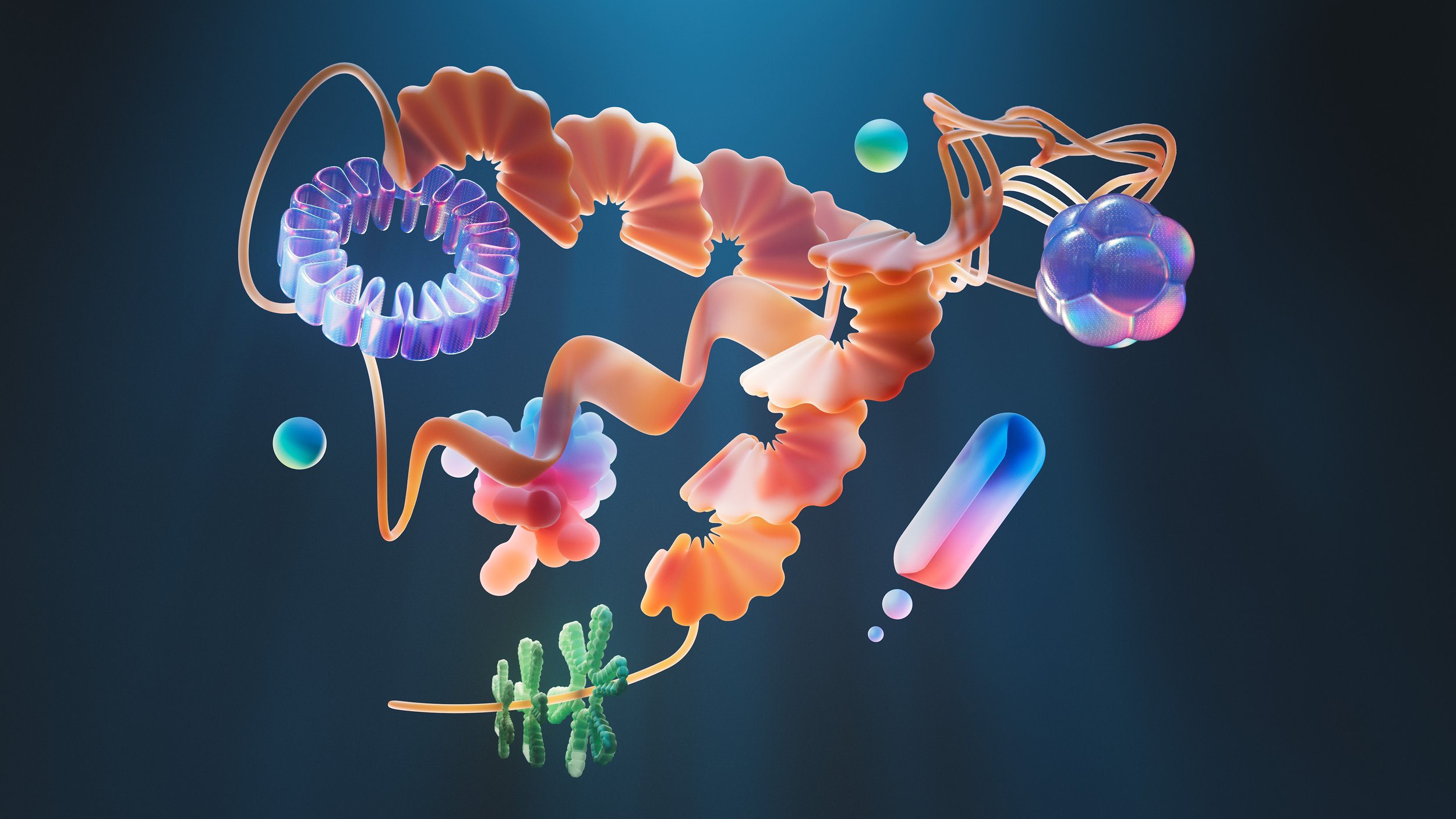

The first concept was visualising AI for biology. Here, the main goal was to visualise the process behind their AlphaFold system, an AI system developed by Google DeepMind that predicts a protein's 3D structure from its amino acid sequence. Below is my interpretation of the process.

The second concept was visualising multimodality. Multimodality is the representation of data using information from multiple sources, or modalities, such as an image, a piece of audio, a piece of text or other forms, often combining several representations at once. Below is my interpretation of the concept.

Designed, directed & produced: Twistedpoly

Music & Sound Design: Marko Lavrin

Making of

Below is a short making-of video.

A project walkthrough / technical breakdown is also available on Gumroad.